Sam Altman Is the Oppenheimer of Our Age

https://nymag.com/intelligencer/article/sam-altman-artificial-intelligence-openai-profile.html

This article was featured in One Great Story, New York’s reading recommendation newsletter.

This past spring, Sam Altman, the 38-year-old CEO of OpenAI, sat down with Silicon Valley’s favorite Buddhist monk, Jack Kornfield. This was at Wisdom 2.0, a low-stakes event at San Francisco’s Yerba Buena Center for the Arts, a forum dedicated to merging wisdom and “the great technologies of our age.” The two men occupied huge white upholstered chairs on a dark mandala-backed stage. Even the moderator seemed confused by Altman’s presence.

“What brought you here?” he asked.

“Yeah, um, look,” Altman said. “I’m definitely interested in this topic” — officially, mindfulness and AI. “But, ah, meeting Jack has been one of the great joys of my life. I’d be delighted to come hang out with Jack for literally any topic.”

It was only when Kornfield — who is 78 and whose books, including The Wise Heart, have sold more than a million copies — made his introductory remarks that the agenda became clear.

“My experience is that Sam … the language I’d like to use is that he’s very much a servant leader.” Kornfield was here to testify to the excellence of Altman’s character. He would answer the question that’s been plaguing a lot of us: How safe should we feel with Altman, given that this relatively young man in charcoal Chelsea boots and a gray waffle henley appears to be controlling how AI will enter our world?

Kornfield said he had known Altman for several years. They meditated together. They explored the question: How could Altman “build in values — the bodhisattva vows, to care for all beings”? How could compassion and care “be programmed in in some way, in the deepest way?”

Throughout Kornfield’s remarks, Altman sat with his legs uncrossed, his hands folded in his lap, his posture impressive, his face arranged in a manner determined to convey patience (though his face also made it clear patience is not his natural state). “I am going to embarrass you,” Kornfield warned him. Then the monk once again addressed the crowd: “He has a pure heart.”

For much of the rest of the panel, Altman meandered through his talking points. He knows people are scared of AI, and he thinks we should be scared. So he feels a moral responsibility to show up and answer questions. “It would be super-unreasonable not to,” he said. He believes we need to work together, as a species, to decide what AI should and should not do.

By Altman’s own assessment — discernible in his many blog posts, podcasts, and video events — we should feel good but not great about him as our AI leader. As he understands himself, he’s a plenty-smart-but-not-genius “technology brother” with an Icarus streak and a few outlier traits. First, he possesses, he has said, “an absolutely delusional level of self-confidence.” Second, he commands a prophetic grasp of “the arc of technology and societal change on a long time horizon.” Third, as a Jew, he is both optimistic and expecting the worst. Fourth, he’s superb at assessing risk because his brain doesn’t get caught up in what other people think.

On the downside: He’s neither emotionally nor demographically suited for the role into which he’s been thrust. “There could be someone who enjoyed it more,” he admitted on the Lex Fridman Podcast in March. “There could be someone who’s much more charismatic.” He’s aware that he’s “pretty disconnected from the reality of life for most people.” He is also, on occasion, tone-deaf. For instance, like many in the tech bubble, Altman uses the phrase “median human,” as in, “For me, AGI” — artificial general intelligence — “is the equivalent of a median human that you could hire as a co-worker.”

At Yerba Buena, the moderator pressed Altman: How did he plan to assign values to his AI?

One idea, Altman said, would be to gather up “as much of humanity as we can” and come to a global consensus. You know: Decide together that “these are the value systems to put in, these are the limits of what the system should never do.”

The audience grew quiet.

“Another thing I would take is for Jack” — Kornfield — “to just write down ten pages of ‘Here’s what the collective value should be, and here’s how we’ll have the system do that.’ That’d be pretty good.”

The audience got quieter still.

Altman wasn’t sure if the revolution he was leading would, in the fullness of history, be considered a technological or societal one. He believed it would “be bigger than a standard technological revolution.” Yet he also knew, having spent his entire adult life around tech founders, that “it’s always annoying to say ‘This time it’s different’ or ‘You know, my thing is supercool.’” The revolution was inevitable; he felt sure about that. At a minimum, AI will upend politics (deep fakes are already a major concern in the 2024 presidential election), labor (AI has been at the heart of the Hollywood writers’ strike), civil rights, surveillance, economic inequality, the military, and education. Altman’s power, and how he’ll use it, is all of our problem now.

Yet it can be hard to parse who Altman is, really; how much we should trust him; and the extent to which he’s integrating others’ concerns, even when he’s on a stage with the intention of quelling them. Altman said he would try to slow the revolution down as much as he could. Still, he told the assembled, he believed that it would be okay. Or likely be okay. We — a tiny word with royal overtones that was doing a lot of work in his rhetoric — should just “decide what we want, decide we’re going to enforce it, and accept the fact that the future is going to be very different and probably wonderfully better.”

This line did not go over well either.

“A lot of nervous laughter,” Altman noted.

Then he waved his hands and shrugged. “I can lie to you and say, ‘Oh, we can totally stop it.’ But I think this is …”

Altman did not complete this thought, so we picked the conversation back up in late August at the OpenAI office on Bryant Street in San Francisco. Outside, on the street, is a neocapitalist yard sale: driverless cars, dogs lying in the sun beside sidewalk tents, a bus depot for a failing public-transportation system, stores serving $6 lattes. Inside, OpenAI is low-key kinda-bland tech corporate: Please help yourself to a Pellegrino from the mini-fridge or a sticker of our logo.

In person, Altman is more charming, more earnest, calmer, and goofier — more in his body — than one would expect. He’s likable. His hair is flecked with gray. He wore the same waffle henley, a garment quickly becoming his trademark. I was the 10-billionth journalist he spoke to this summer. As we sat down in a soundproof room, I apologized for making him do yet one more interview.

He smiled and said, “It’s really nice to meet you.”

On Kornfield: “Someone said to me after that talk, ‘You know, I came in really nervous about the fact that OpenAI was gonna make all of these decisions about the values in the AI, and you convinced me that you’re not going to make those decisions,’ and I was like, ‘Great.’ And they’re like, ‘Nope, now I’m more nervous. You’re gonna let the world make these decisions, and I don’t want that.’”

Even Altman can feel it’s perverse that he’s on that stage answering questions about global values. “If I weren’t in on this, I’d be, like, Why do these fuckers get to decide what happens to me?” he said in 2016 to The New Yorker’s Tad Friend. Seven years and much media training later, he has softened his game. “I have so much sympathy for the fact that something like OpenAI is supposed to be a government project.”

The new nice-guy vibe can be hard to square with Altman’s will to power, which is among his most-well-established traits. A friend in his inner circle described him to me as “the most ambitious person I know who is still sane, and I know 20,000 people in Silicon Valley.”

Still, Altman took an aw-shucks approach to explaining his rise. “I mean, I am a midwestern Jew from an awkward childhood at best, to say it very politely. And I’m running one of a handful …” He caught himself. “You know, top few dozen of the most important technology projects. I can’t imagine that this would have happened to me.”

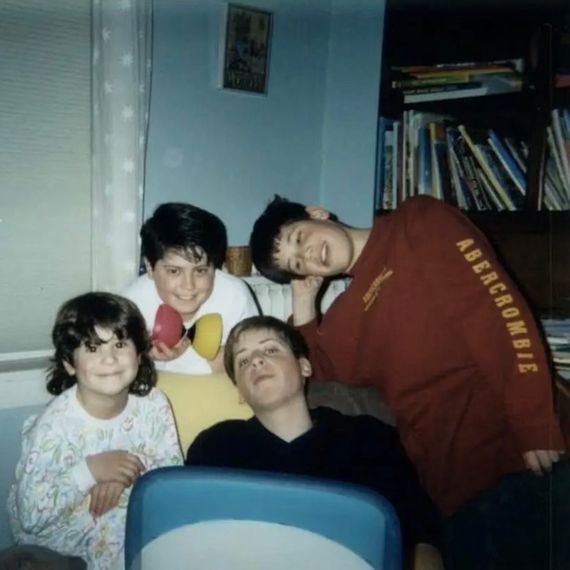

Altman grew up the oldest of four siblings in suburban St. Louis: three boys, Sam, Max, and Jack, each two years apart, then a girl, Annie, nine years younger than Sam. If you weren’t raised in a midwestern middle-class Jewish family — and I say this from experience — it’s hard to imagine the latent self-confidence such a family can instill in a son. “One of the very best things my parents did for me was constant (multiple times a day, I think?) affirmations of their love and belief that I could do anything,” Jack Altman has said. The stores of confidence that result are fantastical, narcotic, weapons grade. They’re like an extra valve in your heart.

The story that’s typically told about Sam is that he was a boy genius — “a rising star in the techno whiz-kid world,” according to the St. Louis Post-Dispatch. He started fixing the family VCR at age 3. In 1993, for his 8th birthday, Altman’s parents — Connie Gibstine, a dermatologist, and Jerry Altman, a real-estate broker — bought him a Mac LC II. Altman describes that gift as “this dividing line in my life: before I had a computer and after.”

The Altman family ate dinner together every night. Around the table, they’d play games like “square root”: Someone would call out a large number. The boys would guess. Annie would hold the calculator and check who was closest. They played 20 Questions to figure out each night’s surprise dessert. The family also played Ping-Pong, pool, board games, video games, and charades, and everybody always knew who won. Sam preferred this to be him. Jack recalled his brother’s attitude: “I have to win, and I’m in charge of everything.” The boys also played water polo. “He would disagree, but I would say I was better,” Jack told me. “I mean, like, undoubtedly better.”

Sam, who is gay, came out in high school. This surprised even his mother, who had thought of Sam “as just sort of unisexual and techy.” As Altman said on a 2020 podcast, his private high school was “not the kind of place where you would really stand up and talk about being gay and that was okay.” When he was 17, the school invited a speaker for National Coming Out Day. A group of students objected, “mostly on a religious basis but also just, like, gay-people-are-bad basis.” Altman decided to give a speech to the student body. He barely slept the night before. The last lines, he said on the podcast, were “Either you have tolerance to open community or you don’t, and you don’t get to pick and choose.”

In 2003, just as Silicon Valley began roaring back from the dot-com bust, Altman enrolled at Stanford. That same year, Reid Hoffman co-founded LinkedIn. In 2004, Mark Zuckerberg co-founded Facebook. At that moment, the eldest son of a suburban Jewish family did not become an investment banker or a doctor. He became a start-up guy. His sophomore year, Altman and his boyfriend, Nick Sivo, began working on Loopt, an early geo-tracking program for locating your friends. Paul Graham and his wife, Jessica Livingston, among others, had just created the Summer Founders Program as part of their venture firm, Y Combinator. Altman applied. He won a $6,000 investment and the chance to spend a few months in Cambridge, Massachusetts, in the company of like-minded nerds. Altman worked so hard that summer that he got scurvy.

Still, with Loopt, he did not particularly distinguish himself. “Oh, another smart young person!” said Hoffman, who until January sat on the OpenAI board, remembering his impressions of the young Altman. This was enough to raise $5 million from Sequoia Capital. But Loopt never caught on with users. In 2012, Altman sold the company to Green Dot for $43.4 million. Not even Altman considered this a success.

The Evolution of Sam Altman: From left: At 14 or 15 with his siblings in the 2000s. At 23, promoting Loopt at Apple WWDC in 2008. Photo: Courtesy of Annie Altman; CNET/YouTube.

“Failure always sucks, but failure when you’re trying to prove something really, really sucks,” Altman told me. He walked away “pretty unhappy” — but with $5 million, which he used, along with money from Peter Thiel, to launch his own venture fund, Hydrazine Capital. He also took a year off, read a stack of books, traveled, played video games, and, “like a total tech-bro meme,” he said, “was like, I’m gonna go to an ashram for a while, and it changed my life. I’m sure I’m still anxious and stressed in a lot of ways, but my perception of it is that I feel very relaxed and happy and calm.”

In 2014, Graham tapped Altman to take over as president of Y Combinator, which by that point had helped launch Airbnb and Stripe. Graham had described Altman in 2009 as among “the five most interesting start-up founders of the last 30 years” and, later, as “what Bill Gates must have been like when he started Microsoft … a naturally sort of formidable, confident person.”

While Altman was president of YC, the incubator fielded about 40,000 applications from new start-ups each year. It heard in-person pitches from 1,000 of those. A couple hundred got YC funding: usually around $125,000, along with mentoring and networking (which itself included weekly dinners and group office hours), in exchange for giving YC 7 percent of the company. Running YC could be viewed as either the greatest job in Silicon Valley or among the worst. From the perspective of VCs (some of whom, as one put it, spend a lot of time not working and instead “calling in rich” from their yachts), running YC is “spending half the year essentially like a camp counselor.”

Through much of his tenure, Altman lived with his brothers in either of his two houses in San Francisco, one in SoMa, the other in the Mission. He preached a gospel of ambition, insularity, and scale. He believed in the value of hiring from the network of people you already know. He believed in not caring too much what others think. “A big secret is that you can bend the world to your will a surprising percentage of the time — most people don’t even try,” he wrote on his blog. “The most successful founders do not set out to create companies. They are on a mission to create something closer to a religion, and at some point it turns out that forming a company is the easiest way to do so.” He believed the greater downside is cornering yourself with a small idea, not thinking big enough.

Altman’s life was pretty great. He grew extremely rich. He invested in boy-dream products, like developing a supersonic airplane. He bought a prepper house in Big Sur and stocked it with guns and gold. He raced in his McLarens.

He also embraced the techy-catnip utilitarian philosophy of effective altruism. EA justified making piles of money by almost any means necessary on the theory that its adherents knew best how to spend it. The ideology prioritized the future over the present and imagined a Rapture-esque singularity when humans and machines would merge.

In 2015, from deep within this framework, Altman co-founded OpenAI, as a nonprofit, with Elon Musk and four others — Ilya Sutskever, Greg Brockman, John Schulman, and Wojciech Zaremba. The 501(c)(3)’s mission: to create “a computer that can think like a human in every way and use that for the maximal benefit of humanity.” The idea was to build good AI and dominate the field before bad people built the bad kind. OpenAI promised to open-source its research in line with EA values. If anybody — or anybody they deemed “value aligned” and “safety conscious” — was poised to achieve AGI before OpenAI, they would assist that project instead of competing against it.

For several years, Altman kept his day job as YC president. He sent myriad texts and emails to founders each day, and he tracked how quickly people responded because, as he wrote on his blog, he believed response time was “one of the most striking differences between great and mediocre founders.” In 2017, he considered running for California governor. He had been at a dinner party “complaining about politics and the state, and someone was like, ‘You should stop complaining and do something about it,’” he told me. “And I was like, ‘Okay.’” He published a platform, the United Slate, outlining three core principles: prosperity from technology, economic fairness, and personal liberty. Altman abandoned his bid after a few weeks.

Early in 2018, Musk tried to take control of OpenAI, claiming that the organization was falling behind Google. By February, Musk walked away, leaving Altman in charge.

Several months later, in late May, Altman’s father had a heart attack, at age 67, while rowing on Creve Coeur Lake outside St. Louis. He died at the hospital soon after. At the funeral, Annie told me, Sam allotted each of the four Altman children five minutes to speak. She used hers to rank her family members in terms of emotional expressivity. She put Sam, along with her mother, at the bottom.

Altman published an essay called “Moore’s Law for Everything” in March 2021. The piece begins, “My work at OpenAI reminds me every day about the magnitude of the socioeconomic change that is coming sooner than most people believe … If public policy doesn’t adapt accordingly, most people will end up worse off than they are today.”

Moore’s Law, as it applies to microchips, states that the number of transistors on a chip doubles roughly every two years while the price falls by half. Moore’s Law for Everything, as proposed by Altman, postulates “a world where, for decades, everything — housing, education, food, clothing, etc. — became half as expensive every two years.”

By the time Altman wrote this, he had left YC to focus on OpenAI full time. One of the first things the company did under his leadership, in the spring of 2019, was create a for-profit subsidiary. Building AI proved to be wildly expensive; Altman needed money. By summer, he’d raised a billion dollars from Microsoft. Some employees quit, upset at the mission creep away from “the maximal benefit of humanity.” Yet the switch ruffled surprisingly few.

“What, Elon, who is like a hundred-billionaire, is gonna be like, ‘Naughty Sam’?” a friend in Altman’s inner circle said. Altman declined to take equity in the company, and OpenAI initially capped profits of its investors at 100x. But many viewed this as an optics move. A billion times a hundred is a lot of money. “If Elizabeth Warren comes and says, like, ‘Oh, you turned this into a for-profit, you evil tech person,’” Altman’s friend said, “everybody in tech is going to just be like, ‘Go home.’”

Altman continued racing his cars (among his favorites: the Lexus LFA, which was discontinued by 2013 and, according to HotCars, “set you back by at least $950,000”). In the early days of the pandemic, he wore his Israeli Defense Forces gas mask. He bought a ranch in Napa. (Altman is a vegetarian, but his partner, Oliver Mulherin, a computer programmer from Melbourne, “likes cows,” Altman says.) He purchased a $27 million house on San Francisco’s Russian Hill. He racked up fancy friends. Diane von Furstenberg described him, in 2021, as “one of my most recent, very, very intimate friends. Meeting Sam is a little bit like meeting Einstein.”

Meanwhile, as OpenAI started selling access to its GPT software to businesses, Altman gestated a clutch of side projects, preparing for an AI-transformed world. He invested $375 million in Helion Energy, a speculative nuclear-fusion company. If Helion works — a long shot — Altman hopes to control one of the world’s cheapest energy sources. He invested $180 million in Retro Biosciences. The goal is to add ten years to the human life span. Altman also conceived and raised $115 million for Worldcoin, a project that is scanning people’s irises across the globe by having them look into a sphere called an Orb. Each iris print is then linked to a crypto wallet into which Worldcoin deposits currency. This would solve two AI-created problems: distinguishing humans from nonhumans, necessary once AI has further blurred the line between them, and doling back out some capital once companies like OpenAI have sucked most of it up.

This is not the portfolio of a man with ambitions like Zuckerberg, who appears, somewhat quaintly compared with Altman, to be content “with building a city-state to rule over,” as the tech writer and podcaster Jathan Sadowski put it. This is the portfolio of a man with ambitions like Musk’s, a man taking the “imperialist approach.” “He really sees himself as this world-bestriding Übermensch, as a superhuman in a really Nietzschean kind of way,” Sadowski said. “He will at once create the thing that destroys us and save us from it.”

Then, on November 30, 2022, OpenAI released ChatGPT. The software drew 100 million users in two months, becoming the greatest product launch in tech history. Two weeks earlier, Meta had released Galactica, but the company took it down after three days because the bot couldn’t distinguish truth from falsehood. ChatGPT also lied and hallucinated. But Altman released it anyway and argued this was a virtue. The world needs to get used to this. We need to make decisions together.

“Sometimes amorality is what distinguishes a winning CEO or product over the rest,” a former colleague who worked alongside Altman during OpenAI’s first years told me. “Facebook wasn’t technically that interesting, so why did Zuck win?” He could “scale faster and build products without getting caught up in the messiness.”

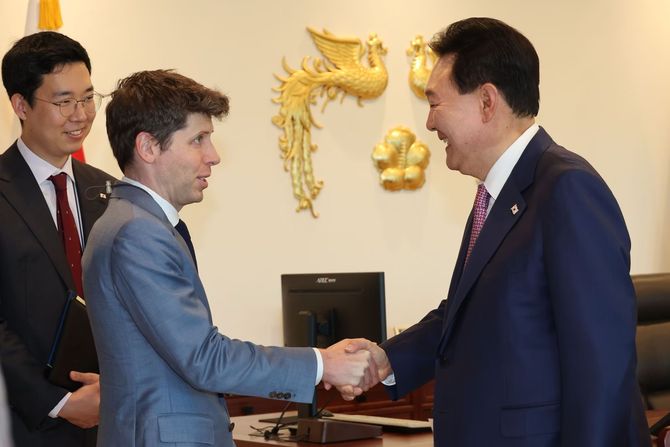

In May 2023, Altman embarked on a 22-country, 25-city world tour. This started, supposedly, as a chance to meet ChatGPT users but turned into a kind of debutante party. Often in a suit but sometimes in his gray henley, Altman presented himself to diplomats as the inevitable new tech superpower. He met with British prime minister Rishi Sunak, French president Emmanuel Macron, Spanish prime minister Pedro Sánchez, German chancellor Olaf Scholz, Indian prime minister Narendra Modi, South Korean president Yoon Suk-yeol, and Israeli president Isaac Herzog. He stood for a photo with European Commission president Ursula von der Leyen. In it, she looks elegant and unimpressed, he looks like Where’s Waldo? — his phone visible in his front pant pocket, his green eyes bugging on exhaustion and cortisol.

Then Altman returned home and appeared to unpack not just his wardrobe but his psyche. From late June through mid-August, he tweeted a lot. If you were hoping to understand him, this was gold.

is the move tonight barbie or oppenheimer?

Altman posted a poll. Barbie lost 17 percent to 83 percent.

ok going with OPpENhAImer.

The next morning, Altman returned to express his disappointment.

i was hoping that the oppenheimer movie would inspire a generation of kids to be physicists but it really missed the mark on that.

let’s get that movie made!

(i think the social network managed to do this for startup founders.)

A careful reader of the Altman oeuvre would be confused. For many years, Altman had been drawing parallels between himself and the bomb-maker. He had noted for reporters that he and Oppenheimer shared a birthday. He had paraphrased Oppenheimer to Cade Metz at the New York Times: “Technology happens because it’s possible.” Altman could not have been surprised, then, that Christopher Nolan, in his biopic, did not create a work of boosterism. Oppenheimer battled shame and regret in the back half of his life for his role in creating the atomic bomb. “Now I am become Death, the destroyer of worlds” — this is both the most famous line in the Bhagavad Gita and what Oppenheimer told NBC News was in his mind during the Trinity test. (It’s also in the film, twice.)

Altman had been linking himself with Oppenheimer throughout his world tour as he discussed (in nonspecific terms) the existential risk posed by AI and argued (very specifically) for a regulatory agency modeled after the International Atomic Energy Agency. The United Nations ratified the IAEA in 1957, four years after it was conceived. The agency’s mandate — to work toward international peace and prosperity — sounded like a great analog to a casual listener. It annoyed experts to no end.

One critique was about its political cynicism. “You say, ‘Regulate me,’ and you say, ‘This is a really complex and specialized topic, so we need a complex and specialized agency to do it,’ knowing damn well that that agency will never get created,” Sadowski said. “Or if something does get created, hey, that’s fine, too, because you built the DNA of it.”

Another problem is the vagueness. As Heidy Khlaaf, an engineer who specializes in evaluating and verifying the safety protocols for drones and large nuclear-power plants, explained to me, to mitigate risks from a technology, you need to define, with precision, what that technology is capable of doing, how it can help and hurt society — and Altman sticks to generalities when he says AI might annihilate the world. (Maybe someone will use AI to invent a superbug; maybe someone will use AI to launch nukes; maybe AI itself will turn against humans — the solutions for each case are not clear.) Furthermore, Khlaaf argues, we don’t need a new agency. AI should be regulated within its use cases, just like other technologies. AI built using copyrighted material should be regulated under copyright law. AI used in aviation should be regulated in that context. Finally, if Altman were serious about stringent safety protocols, he would be taking what he considers to be the smaller harms far more seriously.

“If you can’t even keep your system from discriminating against Black people” — a phenomenon known as algorithmic bias that impacts everything from how job candidates are sorted to which faces are labeled as most attractive — “how are you going to stop it from destroying humanity?” Khlaaf asked. Harm compounds in engineering systems. “A small software bug can wipe out the electric grid in New York.” Trained engineers know that. “Every single one of those companies, every single top contender in AI, has the resourcing and the fundamental engineering understanding to figure out how to reduce harms in these systems. Electing not to do it is a choice.”

The same day as Altman’s Oppenheimer-Barbie poll, he also posted:

everything ‘creative’ is a remix of things that happened in the past, plus epsilon and times the quality of the feedback loop and the number of iterations.

people think they should maximize epsilon but the trick is to maximize the other two.

OpenAI had come under increasing pressure throughout the summer and fall for allegedly training its models — and making money — on datasets filled with stolen, copyrighted work. Michael Chabon organized a class-action suit after learning his books were used without permission to teach ChatGPT. The Federal Trade Commission launched an investigation into the company’s alleged extensive violation of consumer-protection laws. Now Altman was arguing that creativity doesn’t really exist. Whatever the striking writers or pissed-off illustrators might think about their individuality or their worth, they’re just remixing old ideas. Much like OpenAI’s products.

In terms of diction, the math lingo lent a veneer of surety. Mathiness, a term coined in 2015 by Nobel Prize–winning economist Paul Romer, describes mathematical language used not to clarify but to mislead. “The beauty of mathematical language is its capacity to convey truths about the world in glaringly simple terms — E = MC²,” Noah Giansiracusa, a math and data-science professor at Bentley University, told me. “I read his tweet over and over and still don’t really know how to parse it or what exactly he’s trying to say.”

Giansiracusa converted Altman’s words into symbols. “Using C for things creative, R for remix of past things, Q for quality of the feedback loop, N for number of iterations, is he saying C = R + epsilon*Q*N or C = (R + epsilon)*Q*N?” Altman’s phrasing does not make the order of operations clear, Giansiracusa said. “Does the ‘and N’ — and the number of iterations — mean something other than multiplication? Or …”

Altman attracts haters. “It’s like a ’90s movie about a kid who’s been transported into the body of an adult and then has to pretend and hope nobody notices, you know?” Malcolm Harris, author of Palo Alto, told me. “Like he’s like the kid who can throw a fastball a million miles an hour because his arm was broken and came back together wrong, and now he’s Rookie of the Year and a major-league pitcher, but he’s also 12 years old and doesn’t know how to do anything.”

“He’s smart, like for a flyover-state community college,” said a Bay Area VC. “Do you watch Succession? You could make a Tom analogy.”

Some of the shade is, undoubtedly, jealousy. Some is a reaction to Altman’s midwestern nice. But mostly it is grounded in deep anger that tech culture has re-entrenched in its white-male clubbiness. “You know, we” — women — “got in the room for a second and then as soon as we started actually speaking, they were like, ‘GTFO,’” Meredith Whittaker, Signal president and a former Google whistleblower, told me. The only choice now is to exert pressure from the outside. “I want him pressed. Like, stand there and look someone in the eyes and say this shit,” Whittaker continued. “What we’re talking about is laying claim to the creative output of millions, billions of people and then using that to create systems that are directly undermining their livelihoods.” Do we really want to take something as meaningful as artistic expression and “spit it back out as derivative content paste from some Microsoft product that has been calibrated by precarious Kenyan workers who themselves are still suffering PTSD from the work they do to make sure it fits within the parameters of polite liberal dialogue?”

Many, of course, are happy to return to conservative values under the rationale that it’s good business and feels like winning. “I definitely think there’s this tone of, like, ‘I told you so,’” another person close to Altman’s inner circle told me about the state of the industry. “Like, ‘You worried about all of this bullshit’” — this “bullshit” being diversity, equity, and inclusion — “and like, ‘Look where that got you.’” Tech layoffs, companies dying. This is not the result of DEI, but it’s a convenient excuse. The current attitude is: Woke culture peaked. “You don’t really need to pretend to care anymore.”

A Black entrepreneur — who, like almost everybody in tech I spoke to for this article, didn’t want to use their name for fear of Altman’s power — told me they spent 15 years trying to break into the white male tech club. They attended all the right schools, affiliated with all the right institutions. They made themselves shiny, successful, and rich. “I wouldn’t wish this on anybody,” they told me. “Elon and Peter and all of their pals in their little circle of drinking young men’s blood or whatever it is they do — who is going to force them to cut a tiny slice, any slice of the pie, and share when there’s really no need, no pressure? The system works fine for the people for whom it was intended to work.”

The rest of us will be treated how we’re being treated now: with, as the entrepreneur said, “clear and lasting disregard.”

Families replicate social dynamics. Power differentials hurt and often explode.

This is true of the Altmans. Jerry Altman’s 2018 death notice describes him as: “Husband of Connie Gibstine; dear father and father-in-law of Sam Altman, Max Altman, Jack (Julia) Altman” — Julia is Jack’s wife — “and Annie Altman …”

Annie Altman? Readers of Altman’s blog; his tweets; his manifesto, Startup Playbook; along with the hundreds of articles about him will be familiar with Jack and Max. They pop up all over the place, most notably in a dashing photo in Forbes, atop the profile that accompanied the announcement of their joint fund, Apollo. They’re also featured in Tad Friend’s 2016 Altman profile in The New Yorker and in much chummy public banter.

@jaltma: I find it really upsetting when I see articles calling Sam a tech bro. He’s a technology brother.

@maxaltman: He *is* technology, brother.

@sama: love you, (tech) bros

Annie does not exist in Sam’s public life. She was never going to be in the club. She was never going to be an Übermensch. She’s always been someone who felt the pain of the world. At age 5, she began waking up in the middle of the night, needing to take a bath to calm her anxiety. By 6, she thought about suicide, though she didn’t know the word.

She often introduced herself to people in elevators and grocery stores: “I’m Annie Francis Altman. What’s your name?” (Of Sam, she told me, “He’s probably autistic also, but more of the computer-math way. I’m more of the humanity, humanitarian, justice-y way.”) Like her eldest brother, she is extremely intelligent, and like her eldest brother, she left college early — though not because her start-up was funded by Sequoia. She had completed all of her Tufts credits, and she was severely depressed. She wanted to live in a place that felt better to her. She wanted to make art. She felt her survival depended on it. She graduated after seven semesters.

When I visited Annie on Maui this summer, she told me stories that will resonate with anyone who has been the emo-artsy person in a businessy family, or who has felt profoundly hurt by experiences family members seem not to understand. Annie — her long dark hair braided, her voice low, measured, and intense — told me about visiting Sam in San Francisco in 2018. He had some friends over. One of them asked Annie to sing a song she’d written. She found her ukulele. She began. “Midway through, Sam gets up wordlessly and walks upstairs to his room,” she told me over a smoothie in Paia, a hippie town on Maui’s North Shore. “I’m like, Do I keep playing? Is he okay? What just happened?” The next day, she told him she was upset and asked him why he left. “And he was kind of like, ‘My stomach hurt,’ or ‘I was too drunk,’ or ‘too stoned, I needed to take a moment.’ And I was like, ‘Really? That moment? You couldn’t wait another 90 seconds?’”

That same year, Jerry Altman died. He’d had his heart issues, along with a lot of stress, partly, Annie told me, from driving to Kansas City to nurse along his real-estate business. The Altmans’ parents had separated. Jerry kept working because he needed the money. After his death, Annie cracked. Her body fell apart. Her mental health fell apart. She’d always been the family’s pain sponge. She absorbed more than she could take now.

Sam offered to help her with money for a while, then he stopped. In their email and text exchanges, his love — and leverage — is clear. He wants to encourage Annie to get on her feet. He wants to encourage her to get back on Zoloft, which she’d quit under the care of a psychiatrist because she hated how it made her feel.

Among her various art projects, Annie makes a podcast called All Humans Are Human. The first Thanksgiving after their father’s death, all the brothers agreed to record an episode with her. Annie wanted to talk on air about the psychological phenomenon of projection: what we put on other people. The brothers steered the conversation into the idea of feedback — specifically, how to give feedback at work. After she posted the show online, Annie hoped her siblings, particularly Sam, would share it. He’d contributed to their brothers’ careers. Jack’s company, Lattice, had been through YC. “I was like, ‘You could just tweet the link. That would help. You don’t want to share your sister’s podcast that you came on?’” He did not. “Jack and Sam said it didn’t align with their businesses.”

On the first anniversary of Jerry Altman’s death, Annie had the word sch’ma — “listen” in Hebrew — tattooed on her neck. She quit her job at a dispensary because she had an injured Achilles tendon that wouldn’t heal and she was in a walking boot for the third time in seven years. She asked Sam and their mother for financial help. They refused. “That was right when I got on the sugar-dating website for the first time,” Annie told me. “I was just at such a loss, in such a state of desperation, such a state of confusion and grief.” Sam had been her favorite brother. He’d read her books at bedtime. He’d taken portraits of her on the monkey bars for a high-school project. She’d felt so understood, loved, and proud. “I was like, Why? Why are these people not helping me when they could at no real cost to themselves?”

In May 2020, she relocated to the Big Island of Hawaii. One day, shortly after she’d moved to a farm to do a live-work trade, she got an email from Sam asking for her address. He wanted to send her a memorial diamond he’d made out of some of their father’s ashes. “Picturing him sending a diamond of my dad’s ashes to the mailbox where it’s one of those rural places where there are all these open boxes for all these farms … It was so heavy and sad and angering, but it was also so hilarious and so ridiculous. So disconnected-feeling. Just the lack of fucks given.” Their father never asked to be a diamond. Annie’s mental health was fragile. She worried about money for groceries. It was hard to interact with somebody for whom money meant everything but also so little. “Like, either you aren’t realizing or you are not caring about this whole situation here,” she said. By “whole situation,” she meant her life. “You’re willing to spend $5,000 — for each one — to make this thing that was your idea, not Dad’s, and you’re wanting to send that to me instead of sending me $300 so I can have food security. What?”

The two are now estranged. Sam offered to buy Annie a house. She doesn’t want to be controlled. For the past three years, she has supported herself doing sex work, “both in person and virtual,” she told me. She posts porn on OnlyFans. She posts on Instagram Stories about mutual aid, trying to connect people who have money to share with those who need financial help.

She and Altman are black-mirror opposites. Altman jokes about becoming the world’s first trillionaire, someone he knows socially told me. (Altman disputes this and asked me to include this statement: “I do not want to be the world’s first trillionaire.”) He has dedicated himself to building software to replicate — and surpass — human intelligence through stolen data and daisy chains of GPUs.

Annie has moved more than 20 times in the past year. When she called me in mid-September, her housing was unstable yet again. She had $1,000 in her bank account.

Since 2020, she has been having flashbacks. She knows everybody takes the bits of their life and arranges them into narratives to make sense of their world.

As Annie tells her life story, Sam, their brothers, and her mother withheld money her father had left her.

As Annie tells her life story, she felt special and loved when, as a child, Sam read her bedtime stories. Now those memories feel like abuse.

The Altman family would like the world to know: “We love Annie and will continue our best efforts to support and protect her, as any family would.”

Annie is working on a one-woman show called the HumAnnie about how nobody really knows how to be a human. We’re all winging it.

On June 22, 2023, Altman put on a tux and went with his partner, Oliver Mulherin, to a White House dinner.

He did seem like a character caught in a ’90s time-travel movie, a body too small and too young for all the power it was supposed to hold. But he was mostly pulling it off. His tux looked sharp, a positive distinction from Silicon Valley’s other notable Sam, Sam Bankman-Fried, whose slovenly aesthetic now seems like evidence of moral slobbery. Since Altman had taken over as OpenAI’s CEO, the company had not only diluted its nonprofit status. It had quit being very open, quit releasing its training data and source code, quit making much of its technology possible for others to analyze and build upon. Quit working “for the maximal benefit of humanity.” But what could anybody do? In Munich, on his world tour, Altman asked an auditorium full of people if they wanted OpenAI to open-source its next-generation LLM, GPT-5, upon its release.

The crowd responded with a resounding yes.

Altman said, “Whoa, we’re definitely not going to do that, but that’s interesting to know.”

In his office in August, Altman was still hitting his talking points. I asked him what he’d done in the past 24 hours. “So one of the things I was working on yesterday is: We’re trying to figure out if we can align an AI to a set of human values. We’ve made good progress there technically. There’s now a harder question: Okay, whose values?”

He’d also lunched with the mayor of San Francisco, tried to whittle down his 98-page to-do list, and lifted weights (though, he said with some resignation, “I’ve given up on trying to get really jacked”). He welcomed new employees. He ate dinner with his brothers and Ollie. He went to bed at 8:45 p.m.

It’s disorienting, the imperialist cloaked in nice. One of Altman’s most treasured possessions, he told me, is the mezuzah his grandfather carried in his pocket his whole life. He and Ollie want to have kids soon; he likes big families. He laughs so hard, on occasion, he has to lie down on the floor to breathe. He’s “going to try to find ways to get the will of the people into what we built.” He knows “AI is not a clean story of only benefit,” “Stuff is going to be lost here,” and “It’s super-relatable and natural to have loss aversion. People don’t want to hear a story in which they’re the casualty.”

His public persona, he said, is “only a tangential match to me.”

Thank you for subscribing and supporting our journalism. If you prefer to read in print, you can also find this article in the September 25, 2023, issue of New York Magazine.
